Using Rank Tracker Data In Google Data Studio

When you’re an SEO practitioner, data is your friend. Over the past decade, the team here at AuthorityLabs has strived to simplify the way we…

Search right now is essentially that scene in every natural disaster movie when the seismograph changes from drawing a calm and steady line to spiking…

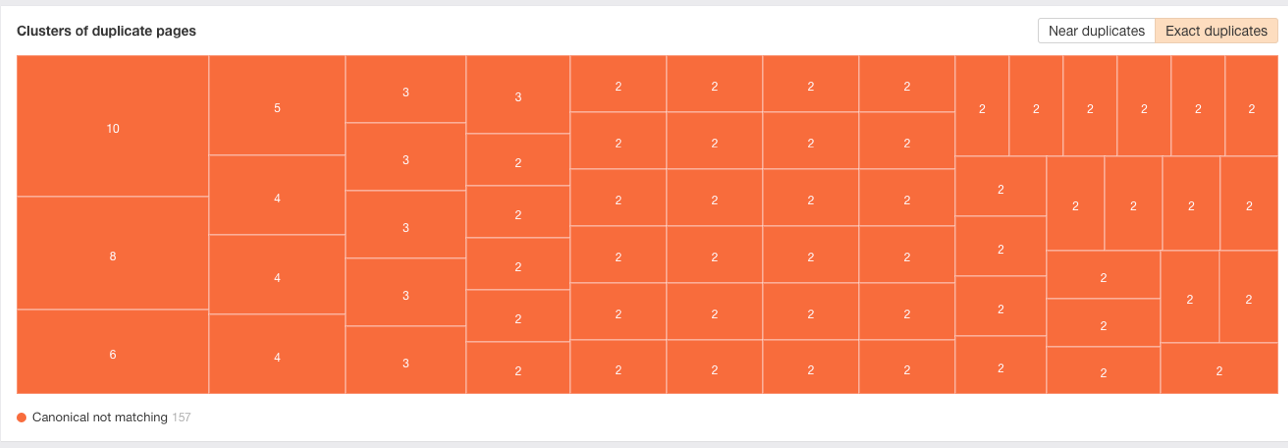

You’ve decided to syndicate your content. Your syndication partners have agreed to add rel=canonical tags back to your original content, so you’ll never have to…

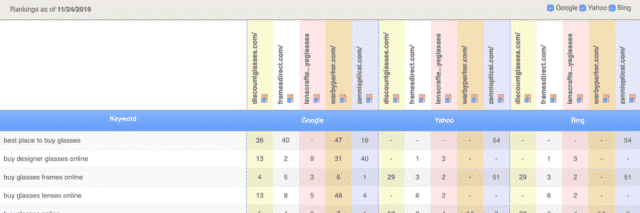

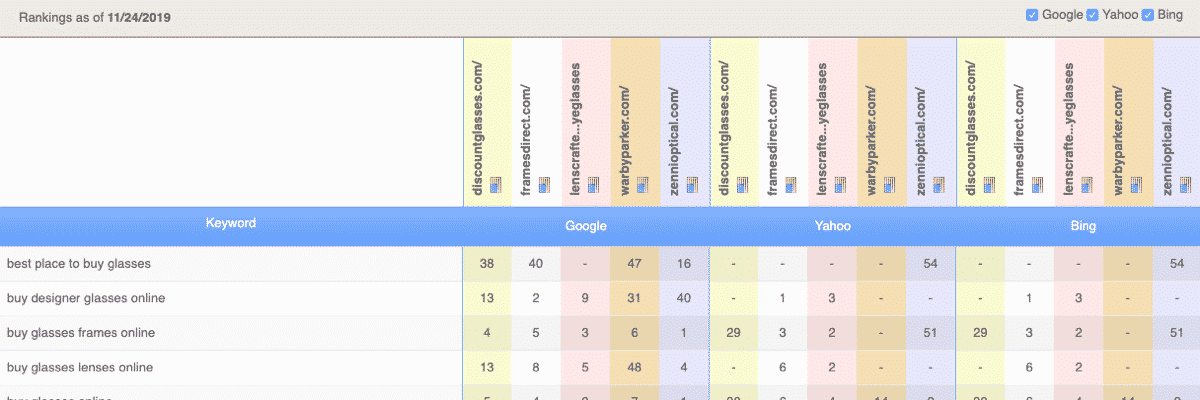

Ranking data is one of the few metrics businesses can measure to monitor how they stack up against the competition in organic search. After all, …

Due to the amount of data at our fingertips, these days searchers are looking for local business information in a more unique way than ever…

If you’re trying to optimize your website for organic search, tracking your keyword rankings is crucial. Keyword tracking delivers a lot of benefits beyond just…

The ability to work with data to assist your SEO efforts has changed over the years. It used to be a serious pain in the…

Ranking a website with a single location is one thing, but having multiple sites with multiple locations around the world is a whole other ball…

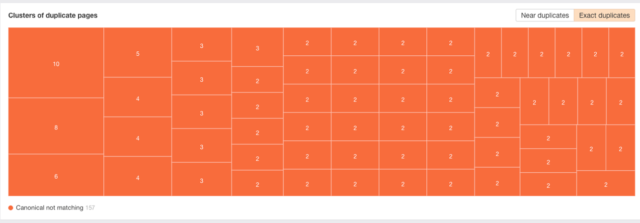

Canonical issues caused by duplicate content are a really common SEO problem for websites. Having identical or very similar content on more than one URL can…
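If you want a quick way to see which canonical URL a page actually declares, the sketch below pulls every `<link rel="canonical">` out of a page's HTML using only Python's standard library. It's a minimal illustration, not an AuthorityLabs tool: `find_canonicals` and `points_to` are hypothetical helper names, it assumes you already have the page's HTML as a string, and treating more than one canonical tag as a failure is our own conservative assumption.

```python
from html.parser import HTMLParser
from typing import List


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: List[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        if tag != "link":
            return
        # Attributes without a value come through as None; normalize to "".
        attr_map = {name: (value or "") for name, value in attrs}
        # rel may contain several space-separated tokens, e.g. "canonical alternate".
        rel_tokens = attr_map.get("rel", "").lower().split()
        href = attr_map.get("href", "").strip()
        if "canonical" in rel_tokens and href:
            self.canonicals.append(href)


def find_canonicals(html: str) -> List[str]:
    """Hypothetical helper: return every canonical URL declared in the HTML."""
    parser = CanonicalFinder()
    parser.feed(html)
    parser.close()
    return parser.canonicals


def points_to(html: str, original_url: str) -> bool:
    """Hypothetical helper: True only if the page declares exactly one
    canonical and it matches original_url (ignoring a trailing slash)."""
    canonicals = find_canonicals(html)
    return (
        len(canonicals) == 1
        and canonicals[0].rstrip("/") == original_url.rstrip("/")
    )


if __name__ == "__main__":
    page = '<html><head><link rel="canonical" href="https://example.com/original-post/"></head></html>'
    print(find_canonicals(page))  # ['https://example.com/original-post/']
    print(points_to(page, "https://example.com/original-post"))  # True
```

You could run something like `points_to` against a syndicated copy of a post to confirm its canonical tag really points back to your original URL. Fetching the page and handling relative URLs are left out to keep the example short.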

I help clients update their old blog posts to regain lost clicks and organic search rankings, so a big part of what I do is…

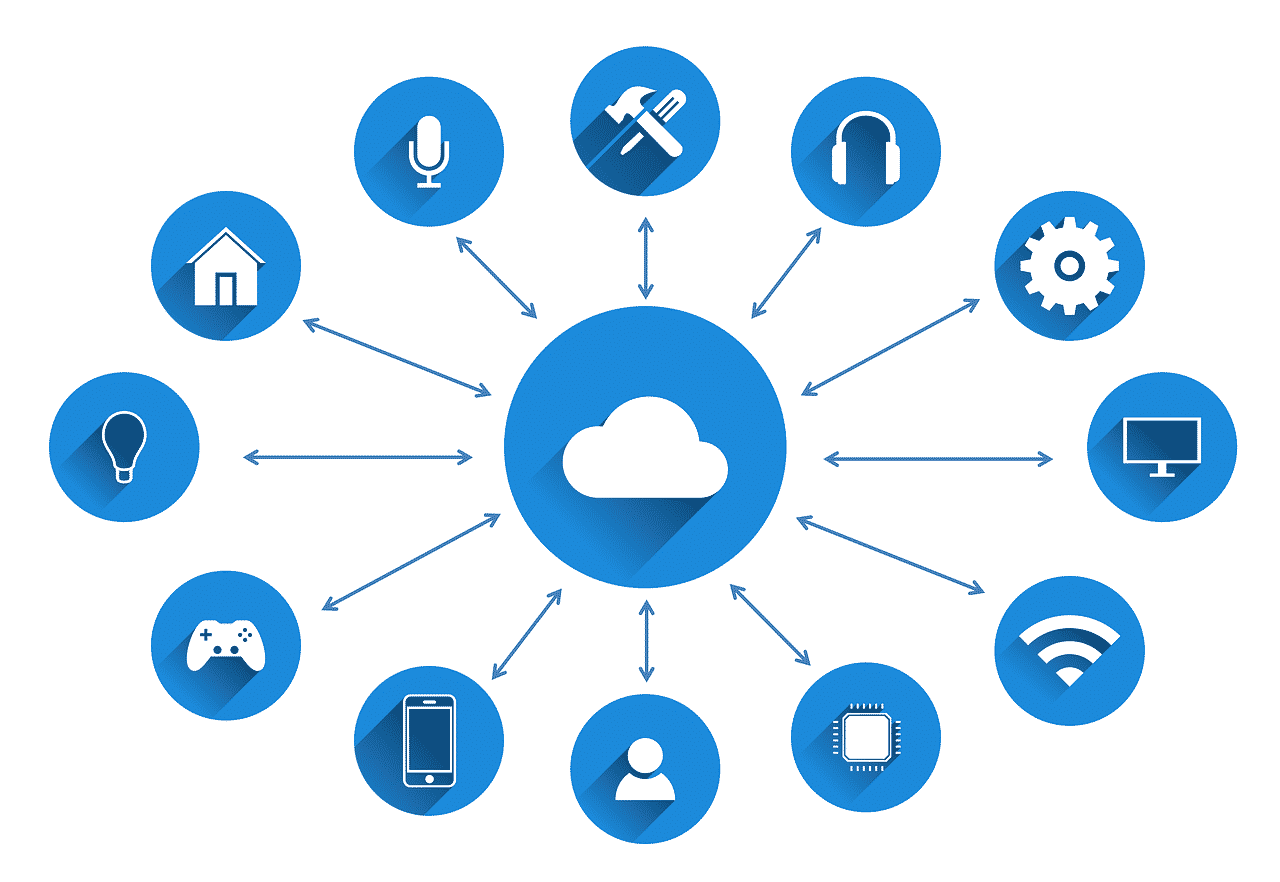

The term artificial intelligence, or AI, may sound intimidating and unfamiliar to most, but we promise that it doesn’t have to be. In fact, the…

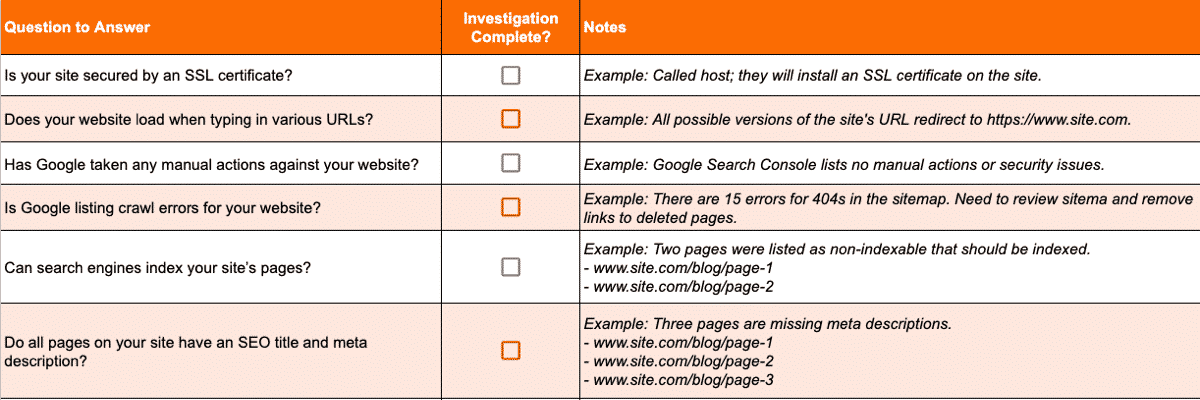

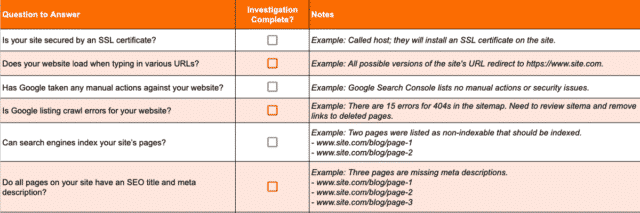

If you’re a small business owner who suspects your website may have issues that are negatively impacting your SEO, but you don’t have the budget to…