Knowing how to do a site audit is an extremely useful skill for any marketer. But if you’re like me, intimidation can creep in big time when you start one of these audits. I’m here to help with that! I pulled several key points from Annielytics’ Site Audit Checklist and from Jeremy Rivera’s Simple DIY Site Audit post. Thank you for the inspiration, guys!

I also use Screaming Frog to crawl the sites and sitemaps. If you haven’t downloaded Screaming Frog… do ett. If you don’t know how to use it, there’s a tutorial for that.

Now, let’s dive in! You can get the full tutorial here:

If you don’t have 10 minutes to watch me talk, no problem. Here’s the step-by-step walkthrough:

Audit Magic

Like I said above, this site audit checklist is a dreamboat. Annie Cushing gave it to the marketing industry as a gift and there are about 10 viewers on it at any given time. Craziness.

Yo’ Checklist

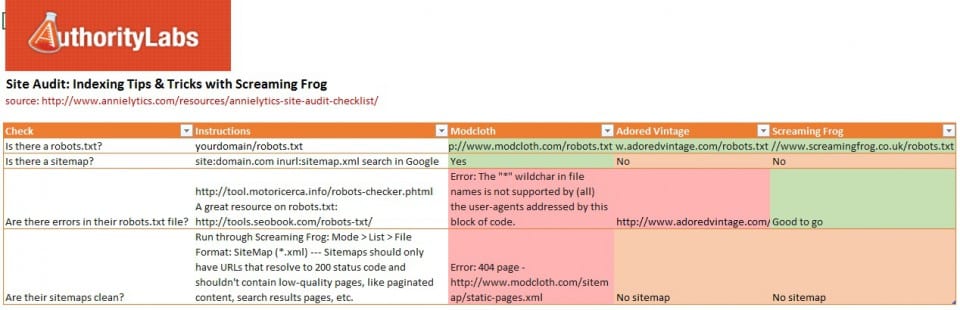

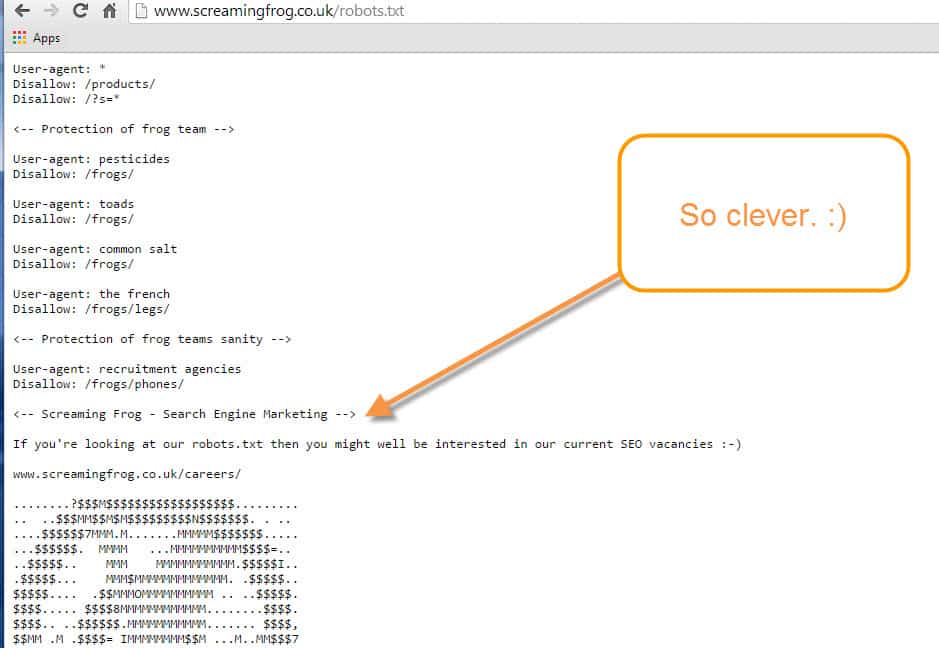

I grabbed a few checklist items to walk through and take the intimidation out of them. I compared a few different sites: Modcloth, Adored Vintage, and (#theoneandonly) Screaming Frog. And I color coded them … duh.

- Is there a robots.txt?

- Is there a sitemap?

- Are there errors in their robots.txt file?

- Are their sitemaps clean?

Want to follow along? Download this audit checklist.

Now that we’ve got that all set up, let’s get down to business.

Step 1 – Check for Robots.txt

Go to the domain homepage and add /robots.txt to the end of the URL (for example, www.yourdomain.com/robots.txt). Not sure what a robots.txt file is?

“A robots.txt file is a text file that stops web crawler software, such as Googlebot, from crawling certain pages of your site. The file is essentially a list of commands, such as Allow and Disallow, that tell web crawlers which URLs they can or cannot retrieve. So, if a URL is disallowed in your robots.txt, that URL and its contents won’t appear in Google Search results.” (Google Support)
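To make the Allow and Disallow idea concrete, here’s a minimal sketch using Python’s built-in `urllib.robotparser`. The robots.txt contents and the example.com URLs are made up for illustration, and the `can_crawl` helper is my own name, not part of any tool mentioned in this post.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt contents for illustration (not from any real site).
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /checkout/
Allow: /
"""


def can_crawl(robots_text, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser.can_fetch(user_agent, url)


# The checkout page is disallowed, the dresses page is not.
print(can_crawl(SAMPLE_ROBOTS, "https://www.example.com/checkout/cart"))
print(can_crawl(SAMPLE_ROBOTS, "https://www.example.com/dresses"))
```

One caveat: Python’s parser applies the first rule that matches, while Google picks the most specific matching rule, so treat this as a rough check rather than a stand-in for how Googlebot behaves.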

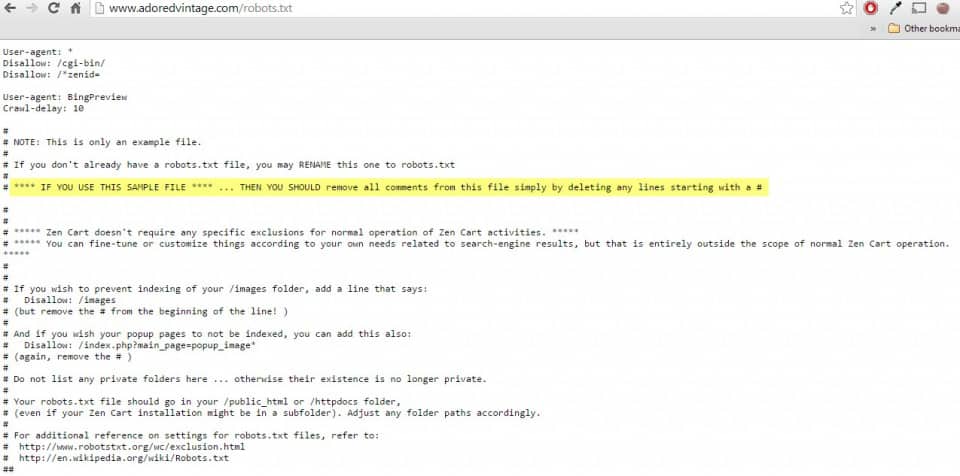

The first site I looked at was Adored Vintage. They had a robots.txt, but most of it was copied and pasted from a generator. It even still included the generator’s note: “If you use this as a sample file then you should remove all comments …” This does cause problems later.

Incorrect:

Correct:

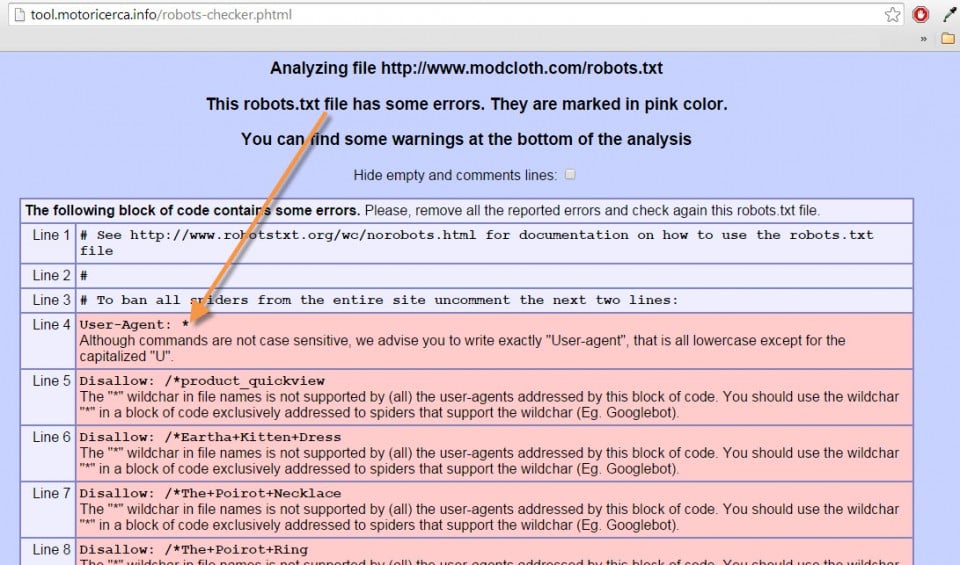

Step 2 – Check for Errors in Your Robots.txt

There are a bunch of guidelines for how to set up the robots.txt file correctly. Now to learn all the rules and implement them… jk. Luckily, there’s a tool for that. I ran this analysis for Modcloth and they had a few mistakes, like using an asterisk, that caused several errors in their Robots.txt.
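If you’re curious what a checker like that does under the hood, here’s a rough sketch in Python. To be clear, this is not the tool I used, and the rules it checks (unknown directive names, a missing colon, Allow or Disallow rules that show up before any User-agent line) are my own assumptions about common mistakes, not a complete list of the guidelines.

```python
# Directive names this sketch treats as valid (an assumption, not a full spec).
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots(robots_text):
    """Return a list of (line_number, message) for suspicious-looking lines."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(robots_text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' between directive and value"))
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        name = name.lower()
        if name not in KNOWN_DIRECTIVES:
            problems.append((number, f"unknown directive '{name}'"))
        elif name == "user-agent":
            seen_user_agent = True
        elif name in {"allow", "disallow"} and not seen_user_agent:
            problems.append((number, f"'{name}' appears before any User-agent line"))
    return problems


# Made-up file with a misspelled directive (line 2) and a missing colon (line 3).
SAMPLE = "User-agent: *\nDisalow: /tmp/\nDisallow /private/\n"
for line_number, message in lint_robots(SAMPLE):
    print(line_number, message)
```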

Now that you’ve cleaned up your Robots.txt, let’s move on to your sitemap.

Step 4 – Find your Sitemap

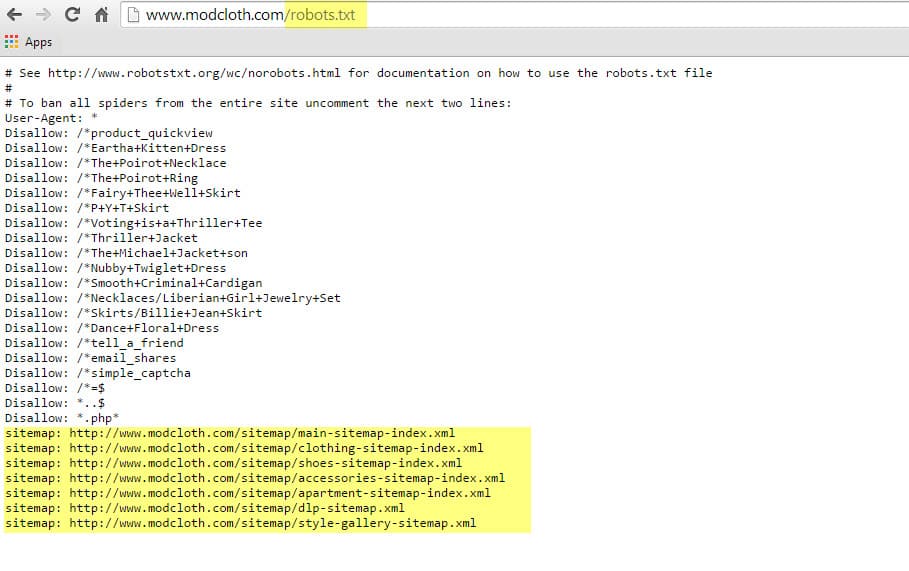

Unlike the Robots.txt file, you can’t always just type in www.yourdomain.com/sitemap.xml. Sometimes the sitemap is located under a different folder. A quick way to find it is to search Google for site:domain.com inurl:sitemap.xml. Here is the one I downloaded from Modcloth.

Your sitemap may (and should) be listed in the robots.txt. Modcloth does a wonderful job with this. They list specific links to their sitemaps, and the first one is the main sitemap.
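Since sitemaps are listed in robots.txt on `Sitemap:` lines, you can pull them out with a few lines of Python. This is just a sketch: the `sitemaps_from_robots` helper and the example.com URLs are made up for illustration and are not Modcloth’s actual file.

```python
def sitemaps_from_robots(robots_text):
    """Return the sitemap URLs listed in robots.txt text, in file order."""
    urls = []
    for raw in robots_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        name, separator, value = line.partition(":")
        if separator and name.strip().lower() == "sitemap" and value.strip():
            urls.append(value.strip())
    return urls


# Made-up robots.txt with two sitemap entries.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /checkout/

Sitemap: https://www.example.com/sitemap.xml
Sitemap: https://www.example.com/blog/sitemap.xml
"""

print(sitemaps_from_robots(SAMPLE_ROBOTS))
```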

Do you need a sitemap? Check out a sitemap generator like this one.

Step 5 – Sitemaps in Screaming Frog

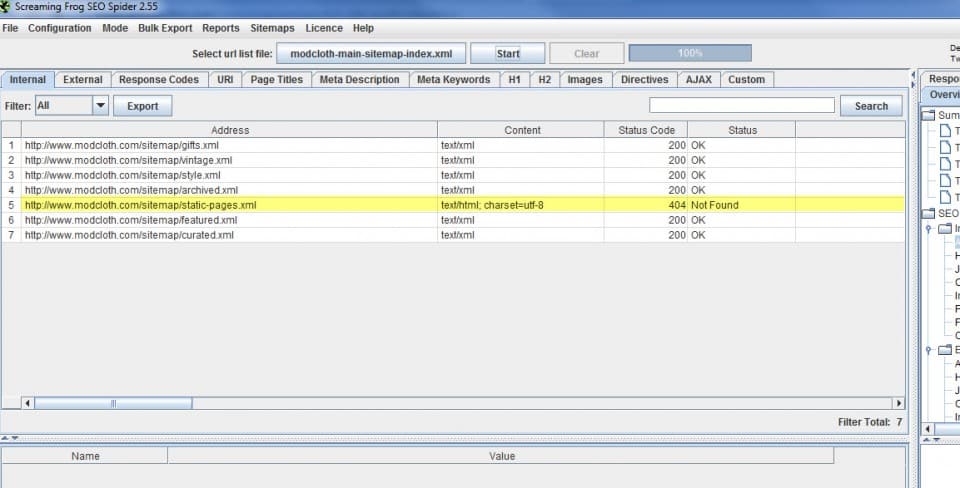

Many times companies will put up a sitemap and not update it for months, or even years. Check your sitemap to make sure you’re not linking to any 404 (broken) pages.

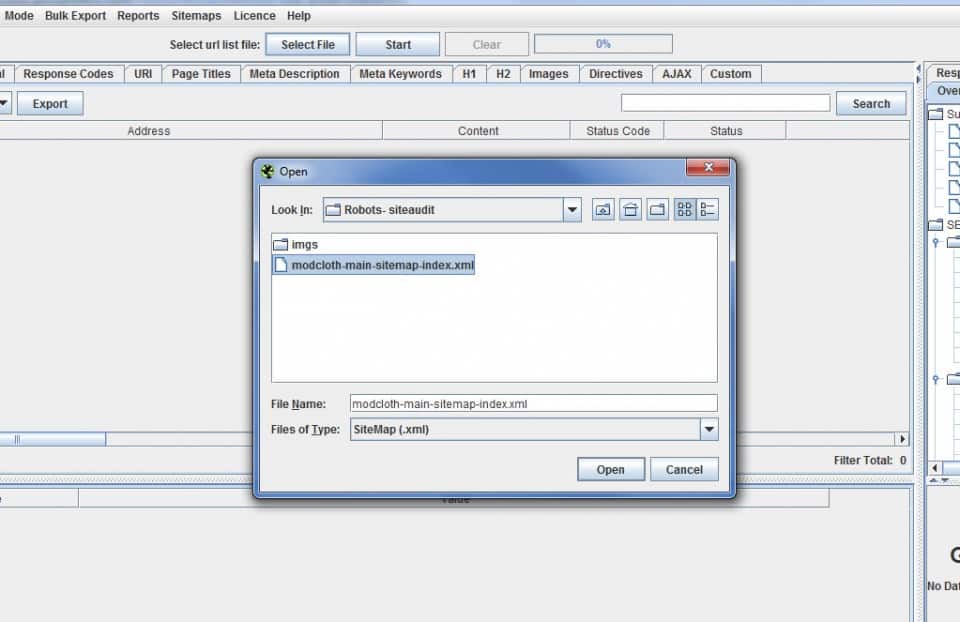

To do this, save a copy of your sitemap.xml file.

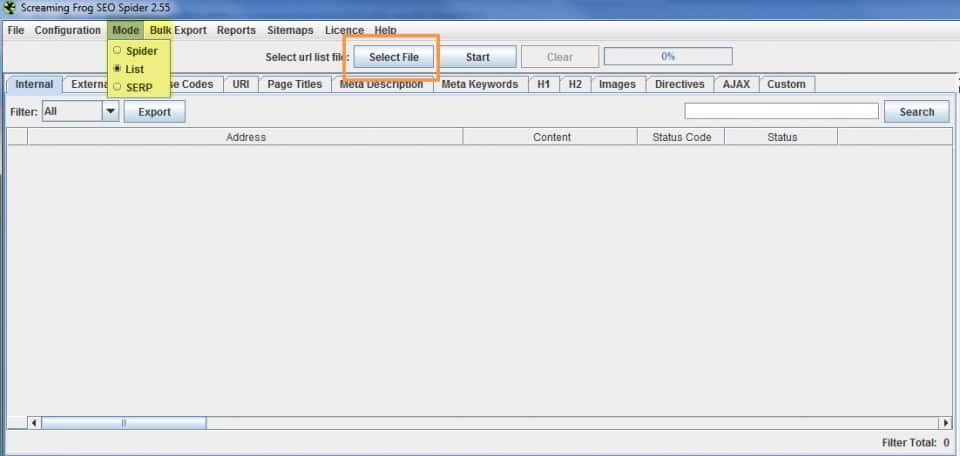

Then change Mode > List. This switches Screaming Frog from an online scraper to a file scraper. Cool, right?

Select file > File type > .xml

Modcloth has a 404 page in their main sitemap. That’s telling Google to check out a broken page, which is definitely bad for business.
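If you want to see roughly what this check involves, here’s a small Python sketch that pulls the URLs out of a saved sitemap and flags any that come back with an error status. It’s not how Screaming Frog works internally. The function names are my own, and I’m assuming the sitemap uses the standard sitemaps.org namespace and that the server answers HEAD requests (not every server does).

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET

# Namespace used by standard sitemap files.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def urls_in_sitemap(xml_text):
    """Return every <loc> URL found in the sitemap XML text."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.iter(SITEMAP_NS + "loc") if loc.text]


def http_status(url):
    """Return the HTTP status code for url using a HEAD request."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 404, 410, 500, and so on


def broken_urls(xml_text, get_status=http_status):
    """Return (url, status) pairs for sitemap URLs with a status of 400 or higher."""
    broken = []
    for url in urls_in_sitemap(xml_text):
        status = get_status(url)
        if status >= 400:
            broken.append((url, status))
    return broken
```

To try it on the file you saved, something like `broken_urls(open("sitemap.xml", encoding="utf-8").read())` should work. The `get_status` parameter is there so you can swap in a different checker (or a fake one for testing) without touching the rest.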

Those are some quick tips to make sure your Robots.txt and Sitemaps are in tip-top shape. I went over a few other tips in my tutorial video, all of which can be found in Annielytics’ Site Audit Checklist.

Thanks for tuning in! Now go forth and data!